Failing Selenium tests – SOLVED!

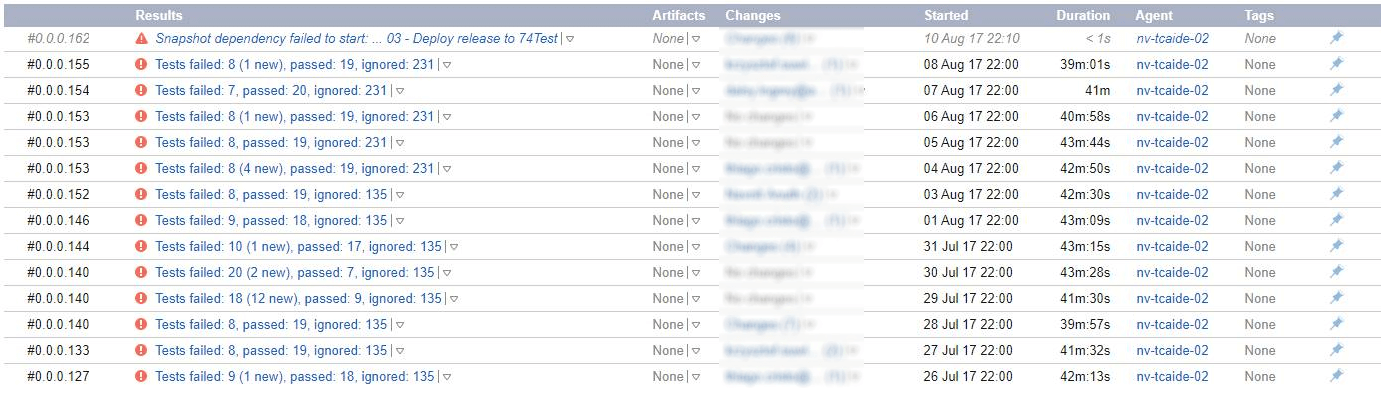

Just under a year ago the team and I made the bold decision to delete our existing suite of automated browser tests. The suite was your traditional web test framework – SpecFlow, Selenium WebDriver, Page Object Model. I could explain at length how we came to decommission the tests, but I think this picture does a pretty decent job of explaining:

In short, the framework was bloated, full of fragile tests (many of which were ignored), the developers were waiting over 40 minutes for feedback, the testers were tired of fixing failing tests and everyone was fed up with red builds. Before making the decision to deprecate the old framework we did review the code and we saw all the traditional poor coding traits – lots of duplication, lack of consistent patterns or naming conventions, huge classes doing far too much, too few layers of abstraction, tightly coupled dependencies, static objects preventing tests from running concurrently… the list goes on.

The plan

We began by decommissioning the old framework (removing the tests from the CI build) and then started working on a replacement suite of end-to-end smoke tests, with the intention of resolving a number of the design issues with the old framework and, in time, replacing the existing test suite altogether.

Naturally, this did present us with the problem of having no automated coverage of the UI or end-to-end journeys, so in the short-term, we had to accept more manual regression checking before releasing, but that was just something we were going to have to live with.

So what next?

We began by reviewing all of the feature files in the existing test pack and classifying each of them (out of scope; add to new end-to-end test suite; move to lower level test suite, e.g. service, component or unit). We then documented the resulting actions as Features and Stories in the team’s backlog.

Next, we ran a workshop with the Business Analysts and Solution Architect to review the coverage, gather feedback and prioritise the scenarios based on business criticality.

The result

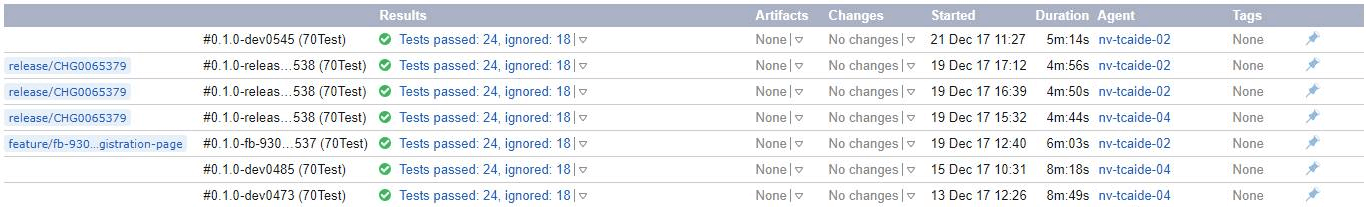

Fast forward a few months and eventually, we reached a momentous occasion. Our new smoke pack was finally merged in and running as part of the CI pipeline and all tests were GREEN!!!

In the first couple of weeks of running the tests, they had already begun offering value and had helped to identify a number of bugs. What’s more, several months on, the tests are still running and passing consistently, and only failing when a genuine bug is introduced.

So how was this achieved and what lessons did we learn along the way?

Test coverage

The UI regression pack was overhauled, with the fragile UI test framework being replaced by a shiny new smoke pack. The new suite covers just the core end-to-end web user journeys (currently 24 scenarios), which is just 10% of the number of tests in the original framework. There are a few tests still to be implemented, but on the whole, this pack covers our core user journeys.

We chose only to include the full user journeys that truly needed to be run through the browser. Everything else was pushed to lower level tests, allowing them to run earlier in the pipeline, with fewer dependencies. This provided much faster feedback and closer alignment to the automation test pyramid.

Framework design

At its heart, the framework is built on Selenium WebDriver v3 and runs using NUnit and SpecFlow. The feature files are written in third-person business domain language to allow for better business collaboration.

Tests have been designed for robustness and stability, but are also efficient and configured to run in parallel. Total execution time is around 5 minutes. They currently run against Google Chrome (Windows OS) in SauceLabs (our cloud cross-browser testing platform) to provide a consistent operating platform and to improve stability. They have also been configured to run in our TeamCity CI pipeline against our SIT environment.

We have done away with the Page Object Model and PageFactory, and have adopted the Screenplay pattern (formerly the Journey pattern), to maximise maintainability and code reuse. This pattern introduces more layers of abstraction and offers a more SOLID approach.
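To make the extra layers of abstraction concrete, here is a minimal sketch of the Screenplay idea: an actor performs small, composable tasks and asks questions about the result. It is written in Python purely for brevity and is not our framework's code. Every name in it (`Actor`, `SignIn`, `PageHeading`, `FakeBrowser`, the example URL) is hypothetical, and `FakeBrowser` is an in-memory stand-in for a real WebDriver session so that the sketch runs without a browser.

```python
from dataclasses import dataclass


class FakeBrowser:
    """Hypothetical stand-in for a WebDriver session (no real browser needed)."""

    def __init__(self):
        self.url = ""
        self.fields = {}
        self.heading = ""

    def open(self, url):
        self.url = url
        self.heading = "Sign in"

    def type_into(self, field_name, text):
        self.fields[field_name] = text

    def submit(self):
        # Pretend the application greets whoever is named in the form.
        self.heading = "Welcome, " + self.fields.get("username", "")


@dataclass
class Actor:
    """The user persona; the browser is the actor's ability to use the site."""
    name: str
    browser: FakeBrowser

    def attempts_to(self, *tasks):
        for task in tasks:
            task.perform_as(self)

    def asks(self, question):
        return question.answered_by(self)


# Low-level interactions: each class does exactly one thing.
@dataclass(frozen=True)
class Open:
    url: str

    def perform_as(self, actor):
        actor.browser.open(self.url)


@dataclass(frozen=True)
class Enter:
    text: str
    field_name: str

    def perform_as(self, actor):
        actor.browser.type_into(self.field_name, self.text)


class Submit:
    def perform_as(self, actor):
        actor.browser.submit()


# A business-level task composed from the interactions above.
@dataclass(frozen=True)
class SignIn:
    username: str

    def perform_as(self, actor):
        actor.attempts_to(
            Open("https://example.test/login"),
            Enter(self.username, "username"),
            Submit(),
        )


# A question reads state back without knowing how the actor got there.
class PageHeading:
    def answered_by(self, actor):
        return actor.browser.heading


tess = Actor("Tess", FakeBrowser())
tess.attempts_to(SignIn("tess"))
print(tess.asks(PageHeading()))  # prints "Welcome, tess"
```

Compared with a page object, which tends to accumulate every locator and action for a page in one class, each task and question here has a single responsibility and can be reused across journeys. In a real suite the interactions would delegate to WebDriver calls rather than to an in-memory fake.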

In order to create the new framework, we also abstracted some of the common functionality into three standalone packages. The advantage of using these packages is that the test project itself is very lightweight. In addition, the packages have been open-sourced within the organisation, allowing other teams to adopt them, and they are now maintained and contributed to by the wider engineering community. These packages have also been configured to run in TeamCity pipelines with their own unit and acceptance tests, meaning that they are stable and easy to maintain (more on this here – Tests for your tests).

Finally, Slack notifications have been configured to post build failures to the team channel, so everyone in the team is aware when there is an issue to be investigated.

Next steps

After going live with the new framework we made the following agreements and added them to the team’s charter:

- Keep tests green, as if our lives depended on it!

- Keep code quality high, as if our lives depended on it!

- Only add tests that truly need to be tested through the browser

- Keep total test execution time below 10 minutes

We also added some additional stories to the team backlog to ensure we continue to improve the framework and increase coverage. This will help to minimise the need for manual release testing and will enable us to continue our push towards fully automated Continuous Deployments.

Lessons learned

Having been through this journey here are the lessons we learnt, and some things to consider should you face a similar situation:

- Start with the end in mind – Define what “good” looks like up front. How many tests will you have (ratios work better than exact numbers)? How quickly will they run? Where will they run?

- Communicate – Be open with your team before making bold decisions like decommissioning a whole suite of tests, listen to their concerns and seek their advice

- Stakeholders – Seek buy-in from your stakeholders so everyone knows what is happening, the impacts and the value that it will bring

- Single responsibility principle – Separate common functions into independent libraries/packages and enable greater code reuse

- Open-source – Adopt an open-source model for common frameworks to allow multiple teams to collaborate and benefit from each other’s contributions

- Test code is still code – Apply the same care, practices and design principles to your test code as you would to your production code

- Test your tests – Unit testing your test libraries might seem like overkill, but the unit tests actually allow you to add features and fix bugs with greater speed and confidence

- Pairing – Pair-programming, code reviews and pull requests are a vital part of any software development project and automation frameworks are no different. They help to share knowledge, increase code quality and instil comradery

- Visible failures – Publish test reports and highlight failures

- Root cause – Get people talking about failures and identify the root cause as soon as possible. Bring the whole team together around a common cause and avoid a “them and us” divide within the team

- Measure value – When your automated checks uncover bugs, raise them and tag them so you can keep track of what kind of bugs are being caught and the value they are bringing

- Publicise – Shout about your successes (and failures) so that you and others can learn from them
