The do’s and don’ts of integration testing9 min read

TL/DR: Get the biggest bang for your buck with integration tests that are fast, reliable and easy to maintain.

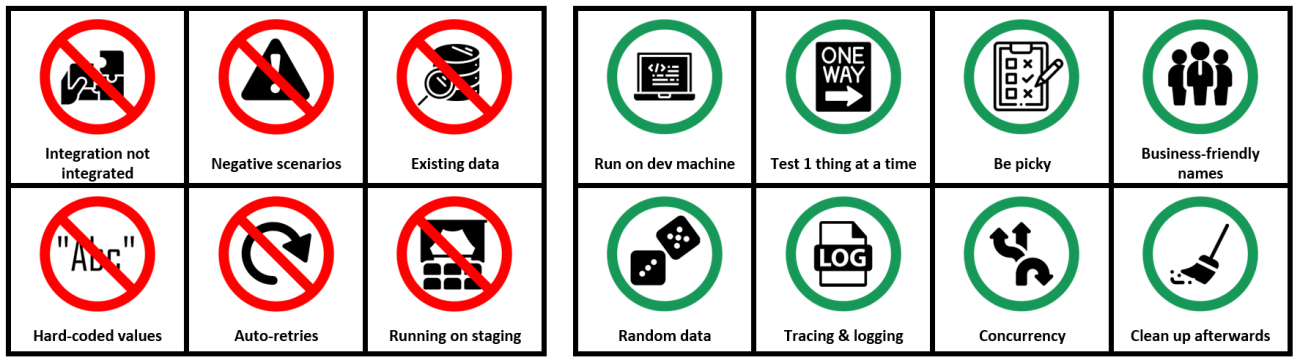

Don’ts

I should first emphasise that I fully appreciate the value of well-designed integration tests. Despite the benefits, there are still some common pitfalls that can result in unstable and difficult to maintain tests.

Don’t confuse integrated and integration tests

A common misunderstanding is between integrated and integration tests. Integrated tests are designed to exercise multiple services or applications working together in an “integrated” way. Typically, these verify end-to-end customer journeys or business flows through a system, and are run on a staging or pre-production environment

In contrast, integration tests are designed to verify the correctness of a single application or service running on a development machine or on a dedicated test environment.

Be sure to know the difference and use the right tests for the task at hand.

Don’t test for negative scenarios

All applications must be able to handle both good and bad user inputs. They must also be resilient to all manner of transient errors such as a loss of network connectivity, database timeouts and broken dependencies. None of these scenarios, however, are good candidates for integration tests.

Because integration tests are out-of-process tests, simulating error scenarios requires significant additional set up. This may include complex data fixtures, specific application configuration or building custom stubs that behave differently depending on the inputs they receive. This can vastly inflate the time and effort required to write integration tests and means that the tests become heavily reliant on this external set up. This makes the tests difficult to maintain and can result in instability and flakiness.

In addition, when tests require lots of environmental set up, they tend to take longer to run and parallel execution becomes problematic.

Integration tests work best when they are focused on positive scenarios, with known input conditions and deterministic outcomes.

Error handling and retry logic should instead be tested using in-process unit or component test. These tests are faster to run and can benefit from using in-memory mocks to replace external dependencies. This makes it easy to simulate failure scenarios, without having to build custom stubs.

Don’t rely on existing data

A common mistake with integration testing is assuming that certain data will always exist in the application’s database. It may be convenient to assume that a certain customer or product will be present but when the data changes, the tests may become unreliable. This is particularly important when running on shared test environments where other developers and testers have access.

In an ideal world, it should be possible to deploy an application to a clean environment and run the integration tests without having to perform any manual steps to seed the database. In order to achieve this, integration tests should seed the data they rely on before they run and if possible, remove it afterwards (more on this later).

Don’t use hard-coded values

When creating data fixtures or choosing input data, using hard-coded values may appear to be the simplest solution. This can work well for an individual test, run in isolation, but can cause problems when tests are repeated.

For instance, the first time the test runs database entries may be saved by the application, based on the inputs from the test. When the test runs a second time using the same input data, the database entries will already exist. Depending whether your application is idempotent (what? check this video for a quick explanation), your test may still pass. However, the application may actually be doing something slightly different “under the covers”. This means your test isn’t testing what you thought it was and it could be masking some unexpected behaviour.

Similarly, if the same test is run by multiple people (or multiple pipelines) at the same time, a race-condition may occur, resulting in a failing test, without a clear or obvious reason.

Often the safest approach is to use randomised input data. Using GUIDs, timestamps or random number generators can be a quick fix. For more complex input data, tools such as AutoFixture can do a lot of the hard work for you.

Don’t use auto-retries

We all want our tests to pass. A passing integration test tells us that our application is up and running and gives us confidence that it’s ready for release. In this endeavour for green tests, it can be tempting to use automatic retries when the first attempt returns an error or times out.

The problem with this approach is that it can hide issues and bugs, giving you a false sense of confidence.

If the application suffers from transient issues or cold-start slowness, it’s far safer to fix the system and underlying issue, making it more resilient. Rather than glossing over the failures with a test retry.

Don’t run on staging

Running integration tests on your staging or pre-production environment may seem like a no-brainer. From experience, however, running integration tests in fully integrated environments can cause more harm than good. Because integration tests often use fake or nonsensical data, tests on one application can often lead to unanticipated errors in downstream apps. These errors can be troublesome to diagnose and can distract from genuine bugs elsewhere.

Well-designed integration tests should be able to run against your local machine or your test environment, thus verifying the correctness and stability of the application during development.

Providing a comprehensive set of automated end-to-end or user-acceptance tests can be run on the higher environments, it is unlikely that running integration tests on staging will provide any additional benefit.

Do’s

The list of don’ts should help you to design a set of stable, reliable integration test. The following list of do’s provides a set of good practices to help you to maximise your return on investment:

Do run on your dev machine

The best automated tests are fast, reliable and require little or no set up. These tests can be run throughout the day to provide regular feedback on the stability of the codebase.

If you are fortunate enough to be able to deploy your application to your local development machine, then make it a habit to deploy periodically and run your integration tests straight away.

Running applications locally can be challenging, especially if external dependencies need to be provisioned or configs need to be transformed. If possible, script these steps so you don’t have to spend time manually configuring your app before running the tests.

If running your app locally is not an option, deploying to your test environment regularly and running the integration tests in your CI/CD pipeline or directly from your IDE can be a good second best.

Do test one thing at a time

As with all automated tests, it is advisable to test a single operation with each test. Combining lots of things into a single test may seem efficient when writing them but can cause problems later on. Tests with a small scope are quick to debug and make failures easier to reproduce and investigate.

Do be picky

No test is free to write, and all tests require maintenance from time to time. It is wise to be selective over which integration tests you create. A hundred tests are not one hundred times better than one test and there is always a point of diminishing returns.

Focus on positive scenarios and try to use the fewest number of tests for the greatest value and coverage.

Do use business friendly names

Integration tests should be written with the end user in mind. Try to use domain language and describe the behaviour, not the implementation. Personally, I’m a fan of Vladimir Khorikov’s naming convention as he sets out in his excellent blog post – You are naming your tests wrong!.

Do use random data

I’ve already discussed the pitfalls of using hard-coded test data and input values. Furthermore, using randomised inputs can increase your test coverage at little extra cost.

By randomising inputs within the range of valid values, your tests will be testing different variations and combinations on every run. Care must be used to avoid reducing the determinism of the test but at the same time, a random set of inputs can identify obscure bugs that may overwise go undetected.

If you do use randomised data fixtures or inputs, be sure to log them to the console. This will help you to reproduce any issues you uncover.

Do use tracing and logging

Given that integration tests run out-of-process they are intrinsically difficult to investigate when they fail. A small amount of logging can go a long way to help debug failures. Log verbosely and consistently. You never know when you might need it.

A personal favourite is to use a custom handler to log all HTTP requests and responses.

A great tip from @stphn28 is to include a Correlation ID header when sending HTTP requests or raising events in your integration tests. These are another useful tool for investigating issues when tests fail.

Do design for concurrency

Integration tests are, by their very nature, slower to execute than unit or component test. This makes it even more important to limit the number of tests you write and, where possible, to run them in parallel.

Parallel execution comes out-of-the-box with most modern testing frameworks, but this doesn’t mean that concurrency comes for free.

In order to run tests in parallel they must be designed carefully not to clash or cause race-conditions. This can be made easier by following the aforementioned practices – focusing integration tests on positive scenarios, using random inputs, not relying on existing data and limiting the scope will all help to keep tests deterministic and able to run concurrently.

Do clean up afterwards

If a test inserts data fixtures, it is generally a good practice to delete the data afterwards and return the application to its initial state. If the application makes this easy by providing deletion APIs then it might be a no-brainer. However, if your only way to delete the data is by directly accessing the database, then it may be advisable to leave well alone. In this situation restoring from a backup may be a better solution.

If possible, make use of time-to-live (TTL) settings to automatically clean up data or infrastructure (message bus subscriptions etc) after a fixed time period (e.g. one hour). This can be better than using a tear down method, as the clean-up will still happen if the test doesn’t run to completion. If you rely on tear down methods and the test is cancelled prematurely, then the application may be left in an unknown state that causes subsequent tests to fail.

Summary

When designed well and chosen selectively, integration tests can be invaluable. Good integration test suites are reliable, quick to write, easy to maintain and give rapid feedback on the correctness and stability of the application under test, giving you the confidence needed deploy. Don’t be caught out by the common pitfalls and maximise the return on your testing investment by following the do’s and don’ts above.